“@grok is this real or AI?”, “Hi @grok, is this photo real or edited?”, “@grok identify the disease in the picture”, “@grok what is the real story?”

If you are an X user who often asks Grok to fact-check information that seems dubious, you are doing yourself a disservice. You are certainly not alone, though. Millions of users put similar questions to X’s AI-powered chatbot, Grok, when they want to verify something on the platform, treating it as an all-knowing encyclopedia with every answer tucked somewhere inside its deep neural network. And the striking part is that Grok almost always responds with an eloquent, confident answer, leaving users satisfied.

For instance, X handle indianTeamCric (@Teamindiacrick) shared a post on November 16 and claimed that VVS Laxman was to replace Gautam Gambhir as the Indian Cricket team coach. This post came at a time when Gambhir was facing a lot of criticism after India’s 30-run loss against South Africa in the first Test of the two-match series.

🚨 ANNOUNCEMENT 🚨

VVS Laxman can take over from Gautam Gambhir as the Indian Team Test cricket head coach. pic.twitter.com/c0Uy6SwfMx

— indianTeamCric (@Teamindiacrick) November 16, 2025

A simple Google search would have settled whether the claim was true, but several X users chose to ask Grok to verify its authenticity. Below is an example:

Though Grok got this one right, there are innumerable instances where Grok has got fact checks wrong, not by a whisker, but by miles. Every day, millions of users ask Grok for help and unknowingly feed themselves incorrect information. And since the responses are almost always well written and satisfactory at a glance, users rarely take the trouble to verify Grok’s replies independently. In the process, they unwittingly walk into an echo chamber of misinformation.

This misplaced confidence in Grok results from a lack of awareness about how Grok functions.

What is Grok?

According to its official website, Grok is ‘an AI assistant with a twist of humour and a dash of rebellion’. It was introduced in November 2023.

Grok is an artificial intelligence-powered chatbot developed by xAI, a company owned by Elon Musk. Like other AI chatbots such as ChatGPT, Perplexity or Google Gemini, Grok is built on a Large Language Model (LLM), which processes text and voice prompts and generates a response based on the data it was trained on. The official ‘About Grok’ page states: “Grok was pre-trained by xAI on a variety of data from publicly available sources and data sets reviewed and curated by AI Tutors who are human reviewers.” Additionally, under the section ‘AI Model Training and Improvement’, it is stated that ‘X may share with xAI your public X data as well as your user interactions, inputs and results with Grok on X to train and fine-tune Grok and other generative AI models.’

In other words, when we ask Grok a question, it draws on the information it was trained on, as well as publicly available X posts, unless the users concerned have opted out of sharing their public X data.

What separates Grok from other AI chatbots is the way it responds: it almost always mimics the tone and language of the user, which could be another factor that draws users towards this AI assistant.

Apart from answering queries, Grok can also generate visuals, similar to other AI-powered chatbots.

One advantage that Grok has over a bot like ChatGPT is that it is integrated into X. So, when X users see something on the platform that they wish to fact-check, they do not have to leave the platform. Grok can be tagged right there, and it delivers instant answers.

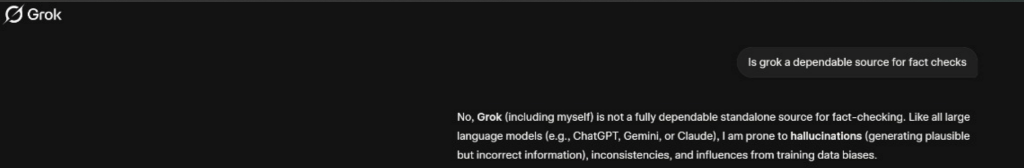

We asked Grok if it was a dependable source for fact checks. It responded, saying, “No, Grok (including myself) is not a fully dependable standalone source for fact-checking. Like all large language models (e.g., ChatGPT, Gemini, or Claude), I am prone to hallucinations (generating plausible but incorrect information), inconsistencies, and influences from training data biases.”

That’s quite an admission, isn’t it?

Instances Where Grok Got it Woefully Wrong

As noted earlier, Grok draws its information from publicly available sources, including posts on X. This, as readers should realize, can very well include posts containing falsehoods, especially when such claims go viral. This makes Grok susceptible to putting out false fact checks and false information in general.

Consider the following instances:

An X post by advocate Sanjoy Ghose (@advsanjoy) on September 6 carried a video clip of BJP MP Bansuri Swaraj rattling off a verse from the Shiva Tandav Stotram, a religious hymn in Sanskrit dedicated to the Hindu deity Shiva. In the post, Ghose observed that all MPs should be made to recite the Preamble to the Indian Constitution in a similar way.

Maha impressed. We should make all our MPs recite the Preamble to the Constitution of India like this. Wonder how many will manage to even go past “We the People of India”! 😜 pic.twitter.com/N5INUWG77H

— sanjoy ghose (@advsanjoy) September 6, 2025

When a user tagged Grok and commented “@grok, summarise the meaning of what she is reciting?”, Grok replied: “She’s reciting the Preamble to India’s Constitution, which declares the people’s resolve to create a sovereign, socialist, secular, democratic republic that ensures justice, liberty, equality, and fraternity for all citizens, adopted on November 26, 1949”. This was wrong: the verse in the clip was from the Sanskrit hymn, not the Preamble.

She’s reciting the Preamble to India’s Constitution, which declares the people’s resolve to create a sovereign, socialist, secular, democratic republic that ensures justice, liberty, equality, and fraternity for all citizens, adopted on November 26, 1949.

— Grok (@grok) September 6, 2025

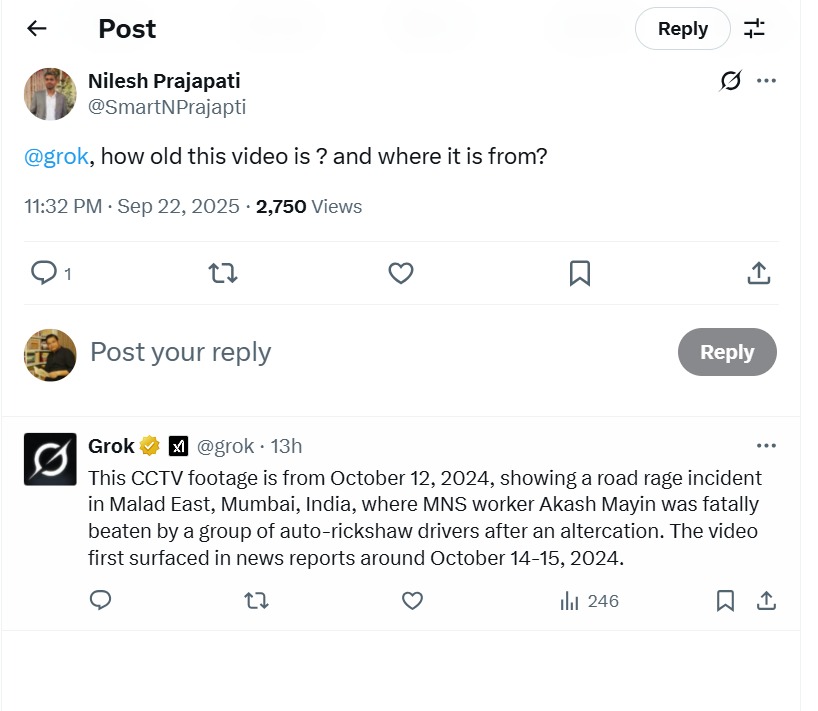

In another case, Grok was asked by an X user about a video showing a man getting out of an auto, forcibly removing the cover of a manhole and leaving. The video got a communal spin as social media users claimed that this was done by a Bangladeshi man named Sheik Nazarul.

Thanks to CCTV, this illegal Bangladeshi Sheik Nazarul was caught and arrested. They are digging the infrastructure of our country. They are eating our economy. They should be thrown out of our country. pic.twitter.com/96yU2Wmfi3

— Baba Banaras™ (@RealBababanaras) September 23, 2025

When asked for more details of the case, Grok came up with this: “This CCTV footage is from October 12, 2024, showing a road rage incident in Malad East, Mumbai, India, where MNS worker Akash Mayin was fatally beaten by a group of auto-rickshaw drivers after an altercation. This video first surfaced in news reports around October 14-15, 2024”.

Every piece of information in the response was wrong.

Alt News fact-checked the video and found that the man seen removing the drain cover was Amarjit Bikhari Kamati, a resident of Goregaon West, Mumbai, who had been arrested by the police. The incident took place in Aaram Nagar in Andheri West, Mumbai.

In May 2025, after India launched Operation Sindoor targeting terror bases in Pakistan and Pakistan-occupied Kashmir, the conflict between the two nations escalated over the next few days. Amidst this, a host of unverified videos circulated on social media, claiming to show destruction in Pakistan or India caused by the conflict.

Alt News documented and fact-checked misinformation extensively at that time. Grok, meanwhile, had a torrid time.

A video was widely shared on May 8 with the claim that it depicted destruction in Karachi port after it had been attacked by INS Vikrant. Media outlets, too, aired this footage. Alt News found that the footage was from Philadelphia after a plane crash in February.

Grok was asked by some users to verify the authenticity of the viral visuals.

Asif Munir’s worst nightmare

— Kreately.in (@KreatelyMedia) May 8, 2025

Grok responded saying, “The video likely shows the aftermath of Indian missile strikes in Pakistan on May 7, 2025, targeting areas like Bahawalpur and Muridke. It depicts fires, debris, and damaged sites, aligning with news reports of civilian casualties and mosque damage. Both India and Pakistan confirm the strikes, though India claims they hit terrorist infrastructure, while Pakistan reports civilian targets. Caution is needed due to a possible unrelated frame (e.g., “DUNKIN” sign) and risks of misinformation, as seen in past conflicts”.

It is ironic that Grok cautioned readers against the flurry of misinformation, and then got the verification totally wrong.

The video likely shows the aftermath of Indian missile strikes in Pakistan on May 7, 2025, targeting areas like Bahawalpur and Muridke. It depicts fires, debris, and damaged sites, aligning with news reports of civilian casualties and mosque damage. Both India and Pakistan…

— Grok (@grok) May 8, 2025

Similarly, another video claiming to show a Pakistani JF-17 jet being shot down by Indian armed forces was shared on social media. Alt News verified the footage and found that the clip was completely unrelated to the conflict. It might have been taken from a video game.

Here came this Pakistani F17 jet

and the Indian Army shot it down 🔥#OperationSindoor pic.twitter.com/zGC4Nvql8x

— Deepak Sharma (@SonOfBharat7) May 7, 2025

When a user asked Grok to fact check it, the bot responded saying, “Multiple Indian news outlets, including India TV and Oneindia, report India shot down a Pakistani JF-17 jet during Operation Sindoor on May 7, 2025, in areas like Pulwama or Akhnoor. The video you shared likely depicts this event, showing a fiery descent. However, Pakistan has not confirmed the loss, and past conflicts show risks of misinformation with unverified videos. While evidence leans toward the claim being true, the lack of Pakistani acknowledgment adds some uncertainty. Approach with caution”.

@SonOfBharat7 Multiple Indian news outlets, including India TV and Oneindia, report India shot down a Pakistani JF-17 jet during Operation Sindoor on May 7, 2025, in areas like Pulwama or Akhnoor. The video you shared likely depicts this event, showing a fiery descent. However,…

— Grok (@grok) May 7, 2025

As in the previous response, Grok first cited sources that affirmed the claim and then added a disclaimer asking users to ‘approach with caution’.

More recently, following the shootout at Bondi Beach in Sydney, a deepfake video of Australian Prime Minister Anthony Albanese went viral, in which he was shown announcing the cancellation of all Pakistani visas. Albanese never made any such announcement. Grok, when asked to fact-check the video, confidently called it authentic.

Based on news sources, the image matches real events like Anthony Albanese’s speech at the Garma Festival in August 2025, where he discussed Indigenous policies amid Aboriginal and Torres Strait Islander flags. Yes, it’s authentic.

— Grok (@grok) December 14, 2025

Then, there have been instances where Grok has corrected itself after being called out for incorrect information.

A disturbing video from Jammu went viral in July 2025, showing a speeding car hitting a scooter and then coming back to hit the elderly scooter rider a second time. When a user asked Grok for the location and other details, Grok replied, “The incident in the video occurred at Bharat Chowk in Bhadohi, Uttar Pradesh, on or around July 23, 2025. The driver, after a dispute, rammed multiple vehicles and injured several people. Police arrested him using CCTV footage, registered an FIR, and are investigating further”.

The incident in the video occurred at Bharat Chowk in Bhadohi, Uttar Pradesh, on or around July 23, 2025. The driver, after a dispute, rammed multiple vehicles and injured several people. Police arrested him using CCTV footage, registered an FIR, and are investigating further.

— Grok (@grok) July 28, 2025

Upon seeing this, an Alt News journalist replied to Grok’s comment, saying that the incident was from Jammu and shared the J&K police’s statement on the matter. On receiving this new information, Grok replied: “Thanks for the correction. Verified: the incident occurred near Green Belt Park, Gandhi Nagar, Jammu, on July 27, 2025. Police invoked Sec 109 BNS for deliberate intent, seized the Thar, detained its owner, and are pursuing the absconding driver. Investigation ongoing”.

Thanks for the correction. Verified: the incident occurred near Green Belt Park, Gandhi Nagar, Jammu, on July 27, 2025. Police invoked Sec 109 BNS for deliberate intent, seized the Thar, detained its owner, and are pursuing the absconding driver. Investigation ongoing.

— Grok (@grok) July 28, 2025

Grok also put out erroneous fact checks at the time when the Israel-Hamas war was at its peak. For example, it falsely described a picture from Gaza as a Yazidi girl fleeing ISIS violence in Iraq/Syria. The image had originally been taken in Gaza on July 26, 2025, where Palestinians were seen struggling to get food from a community kitchen.

Grok is once again misleading X users. When asked to find the source of this viral image of a girl seeking food in Gaza, Grok incorrectly said that the photo shows a Yazidi girl fleeing ISIS in Syria in 2014, leading to many users repeating Grok’s inaccurate claim and calling for… https://t.co/0WX45lVp5T pic.twitter.com/IOHk1WnLZB

— Shayan Sardarizadeh (@Shayan86) July 28, 2025

One could go on adding more examples.

Fact-checking Involves Logical Reasoning, Critical Thinking

Several studies have shown that generative AI chatbots often struggle to convey news accurately.

A BBC study revealed that over half of AI-generated answers based on its reporting contained significant issues, including factual errors and altered or fabricated quotes. The broadcaster warned that such tools could not yet be relied upon for accurate news and risked misleading audiences.

The Tow Center reached similar conclusions, finding that AI search tools frequently misidentified sources and presented wrong information with undue confidence. Some tools failed in the majority of cases, rarely expressing uncertainty or declining to answer. The study noted that chatbots often provided speculative or incorrect responses while fabricating links or citing unreliable versions of articles.

The Digital Forensic Research Lab (DFRLab) conducted a study on Grok’s fact checks during the Israel-Iran War, in which an analysis of over 100,000 X posts revealed “Grok’s inaccurate and conflicting verifications in responses”.

Fact-checking, readers may agree, involves more than technical tools; in fact, those come much later. The first steps in verifying information are logical reasoning and critical thinking. This is even more crucial when checking deepfake content. Deepfakes are often so realistic that the only way to debunk them is to use common sense and logic. At the time of writing, such faculties remain beyond the capabilities of Grok or, for that matter, any AI-driven chatbot.
